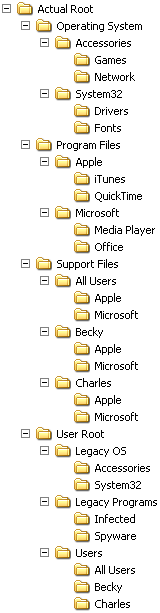

The Solution

© Charles Chandler

What is needed is a system designed so that the user never has to perform tasks as administrator, and that, as a consequence, simply does not have the associated vulnerabilities.

OS Files

The first door that needs to be closed is access to operating system files, including device drivers. The OS should simply not allow the user, or any application other than OS utilities, to change anything with regard to the OS. Device driver vendors should coordinate with the OS vendor to get their software installed, updated, and patched using built-in OS capabilities. This, of course, puts the responsibility on the OS vendor to make sure that device drivers are fully tested before being automatically installed on the user's machine. But considering what's at stake, and how unqualified the user is to evaluate device drivers, this is not unreasonable. Apple has exercised a great degree of control over the release of device drivers for the Macintosh OS, and this has been one of the central reasons for the robustness of the Mac. First, bad drivers are rarely released to the public, because Apple makes sure that they're tested. Second, Apple knows what drivers are out there, because these drivers have to be reviewed by Apple before they are released. So the testing effort can be effective. Third, Apple doesn't have to grant users access to this part of the OS, which means that users can't screw it up, and viruses can't change these files maliciously.

In short, the only people who should ever be able to screw up the OS should be the OS developers themselves. Everyone else, including users and third-party applications, should simply not have access to OS-level files. To gain access to OS files, users should have to log on as "manufacturer," which will grant them full rights to everything on the machine.
There should never be a reason to use this account unless the person is doing OS or device driver development.

Program Files

The second door that needs to be closed is the ability of one application to change the files associated with another application. When a well-behaved installer runs, instead of asking the user where the application should be installed, the installer should make a request to the OS for space within a protected area on the hard drive, into which it can install its files. Along with this request, it will send the OS the name of the executable that it intends to expose to the user. The OS will then create and subsequently manage the hook to that executable, and that hook will be the only way that the user will be able to launch the executable. After installation, outside access to the protected application folder (except via the hook that was registered) will be closed. From this point onwards, no user, nor any other application run by the user, will be able to see or modify the files within this application folder.

The protected application itself will, of course, be able to read and write files within its protected area, and could potentially provide an interface for the user to see and modify protected files. This would probably be poor application design, but the job of the OS is not to eliminate crappy apps. Rather, it is merely the job of the OS to protect itself from user and app error, and to protect well-behaved apps from malicious apps.

Note that once an application has been installed into a protected application folder, the only way for the user to execute that application will be via the hook that was registered at installation time. This has several implications.

First, deleting the hook would effectively uninstall the software, since the app would never be accessible again. Therefore, deleting an application hook should be the way that software gets uninstalled, and this should be perfectly clear to the user.
It's possible that deleting an application hook should not immediately uninstall the software, but rather should simply move the hook to an "Unused Applications" folder, from which it can be retrieved later if the user changes his or her mind, or from which it can be deleted later if the user needs to free up some space on the hard drive. Either way, the significance of deleting an application hook should be extremely obvious.

Second, updating an existing application will have to be accomplished using an existing hook, because only a registered application will have the rights necessary to update files within its protected application folder. To reduce the resultant hardship on the lower tier of developers, the OS vendor should provide an updater mechanism that application developers will be able to integrate easily into their applications. This mechanism should include a way of creating and posting compressed and encrypted update files to an FTP server, along with the calls necessary to query the server for updates, download just the files that are needed, and move those files into place during the next application launch. The OS vendor should also provide roll-back capability, in case the application developer releases a bad patch.

Third, applications that support third-party add-ons will have to provide a mechanism for those add-ons to be installed.

Applications that are not capable of operating effectively within these constraints will not be eligible for installation as "protected" applications.

Users logged on as "programmer" will have full rights to all of the protected application folders (but will not have "manufacturer" rights within the protected OS folder). There should never be a reason to use this account except when doing application development where it is important to run the application from its eventual location within a protected application folder.
Of course, when the developer is logged on as "programmer" the app won't be protected, but it will still be making calls to system services that might make assumptions about the location of the app.

Support Files

As a convenience to system administrators, there should be a "support files" area outside of the protected application folders, where applications can store runtime-generated data. If the user logs in as "administrator," all of these data folders will be visible and modifiable. Each application, when it registers for protected space, will also be granted rights to a corresponding data folder. Only that application, or users logged on as administrator, will have access to the data folder. This way, rogue apps will not be able to corrupt the data files of another application. It will be the responsibility of the application developer to make sure that administrators will be able to work effectively with data files, such as copying them to other machines to transfer configuration settings and user-generated application support files.

User Files

Outside of the protected OS, application, and data folders, there should be a fourth area on the hard drive for user files. This area will be completely unprotected, except in the sense that other user accounts on a multi-user machine will not have rights to the current user's files.

This, of course, means that malicious software will still have access to the user's files, and will be able to delete, corrupt, and/or steal anything there. Malware could also plant viruses in documents used by applications that support scripting (such as MS Office), and those scripts will then have access to everything within the application's folder. It will also still be possible for the user to install rogue apps into the user area, and these rogue apps will have the same network rights as the user, and they will still be able to access the Internet.
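
The rules for the four areas can be summarized as a single access check. The following Python sketch is only an illustration of the policy as described here, not an implementation of any real OS. The names `Area`, `Role`, `Request`, and `may_modify` are hypothetical. It also makes two assumptions that the text does not spell out: that "programmer" and "administrator" are separate roles that do not inherit each other's rights, and that OS utilities are handled elsewhere and are therefore left out.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Area(Enum):
    OS = "os"            # operating system files and device drivers
    PROGRAM = "program"  # protected application folders
    SUPPORT = "support"  # per-application runtime data folders
    USER = "user"        # unprotected user files


class Role(Enum):
    USER = "user"
    ADMINISTRATOR = "administrator"
    PROGRAMMER = "programmer"
    MANUFACTURER = "manufacturer"


@dataclass(frozen=True)
class Request:
    role: Role           # account type the person is logged on with
    login: str           # name of the logged-on account
    app: Optional[str]   # registered app making the request, if any
    area: Area           # which of the four areas is targeted
    owner: str           # owning app (PROGRAM/SUPPORT) or owning login (USER)


def may_modify(req: Request) -> bool:
    """Return True if the request may change files in the target area."""
    # "manufacturer" has full rights to everything on the machine.
    if req.role is Role.MANUFACTURER:
        return True
    # Nobody else may touch OS files (OS utilities are outside this sketch).
    if req.area is Area.OS:
        return False
    # A protected folder is open to its own app, or to a "programmer" login.
    if req.area is Area.PROGRAM:
        return req.role is Role.PROGRAMMER or req.app == req.owner
    # A data folder is open to its own app, or to an "administrator" login.
    if req.area is Area.SUPPORT:
        return req.role is Role.ADMINISTRATOR or req.app == req.owner
    # User files: any app run by the owning user gets in, rogue or not.
    return req.login == req.owner
```

For example, `may_modify(Request(Role.USER, "alice", "rogue", Area.PROGRAM, "editor"))` is false, while the same rogue app targeting `Area.USER` with owner `"alice"` is allowed, which is exactly the remaining exposure discussed above.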
So the architecture described above will not eliminate all of the problems associated with user error and with malicious software. But it will guarantee that the OS will be untouchable, and that well-behaved apps, including their support data, will be vulnerable only to themselves by their own design. This will so greatly reduce the footprint of user error and rogue app problems that the remaining issues will be much easier to manage.
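
To make the hook mechanism from the Program Files discussion more concrete, here is a minimal in-memory sketch in Python. It is a toy model under stated assumptions, not a real OS interface: the class `ProtectedStore` and its methods `install`, `launch`, `delete_hook`, `restore_hook`, and `purge` are hypothetical names, and files are represented as a plain dictionary rather than anything on disk. It assumes the softer uninstall behavior, where deleting a hook moves the application to an "Unused Applications" holding area.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Installation:
    executable: str                                       # file exposed via the hook
    files: Dict[str, bytes] = field(default_factory=dict)  # protected folder contents


class ProtectedStore:
    """In-memory stand-in for the OS service that owns protected app folders."""

    def __init__(self) -> None:
        self._active: Dict[str, Installation] = {}  # hook name -> installation
        self._unused: Dict[str, Installation] = {}  # "Unused Applications" area

    def install(self, hook: str, executable: str, files: Dict[str, bytes]) -> None:
        """Installer request: protected space plus the executable to expose."""
        if hook in self._active or hook in self._unused:
            raise ValueError(f"hook {hook!r} is already registered")
        if executable not in files:
            raise ValueError("the exposed executable must be among the installed files")
        # Copy the files so the caller keeps no reference into the protected area.
        self._active[hook] = Installation(executable, dict(files))

    def launch(self, hook: str) -> str:
        """The hook is the only path to the app; raises KeyError if it is gone."""
        return self._active[hook].executable

    def delete_hook(self, hook: str) -> None:
        """Uninstall from the user's point of view: the app becomes unreachable."""
        self._unused[hook] = self._active.pop(hook)

    def restore_hook(self, hook: str) -> None:
        """The user changed their mind: bring the hook back."""
        self._active[hook] = self._unused.pop(hook)

    def purge(self, hook: str) -> None:
        """Free the space for good by discarding an unused application."""
        del self._unused[hook]

    def hooks(self) -> List[str]:
        """Names of the hooks the user can currently launch."""
        return sorted(self._active)
```

A caller would register an application with something like `store.install("Editor", "editor.bin", {"editor.bin": b"..."})` and from then on reach it only through `store.launch("Editor")`. Nothing in the public interface hands out the file contents, which mirrors the idea that the protected folder is closed to everything except the registered hook.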